091 #makeselfie

A workshop and hackathon project on self-tracking for MakeZurich 2023.

Biometrics is, in one word, how we may describe any measurement of our biological state. Commonly used to describe identity verification through fingerprints or "face unlocks", the term could cover anything from our temperature and pulse to gesture or facial recognition - almost any part of our body and behavior. Can we control this, and learn about it, with a wearable badge? What does it mean to us to take a selfie with our data? This post explores some ideas in this direction from a project at MakeZurich 2023.

TL;DR

These are my notes from my workshop at Bitwäscherei and hackathon at ewz Oerlikon, during which we met to set up and do projects with the MakeZurich badge and various sensors. At the workshop, I plugged in a Pulse Sensor to collect some basic biometrics over time and start some quantified selfie experiments. Later that week, Federico and I worked on a quick prototype called MakeSelfie, which we presented in the MakeZurich pitches. The rest of the post shares a few kilobits more of background. Check out all the MakeZurich 2023 results at now.makezurich.ch

Open Lab Week

After the MakeZurich kickoff where we heard about the 5 challenges for this year's IoT community hackathon, there was a week of Open Lab nights at the Bitwäscherei (a.k.a. MechArtLab) in Zürich. During my workshop there on June 7, we discussed how to Make our own 'data selfies' with biometric sensors, quickly visualize and share signals on our online platforms, and reach for the stars 🌠 inside.

During this hybrid meeting, which a few people joined over Jitsi while others gathered around the makerspace table, I shared a little of the history of my involvement in electronics tinkering (recounted further down). Here are some fun ideas for a quantified selfie that came out of our workshop:

- Measure device energy ratings, power consumption, or charge levels.

- Walk around the park to make unique GPS art based on your movements.

- Use a Gesture sensor to emoji-message by waving your hands in the air.

- Grab PAX counter blips to create an imprint of our invisible.

- Make neat visualizations with just our TTN timestamps.

.. add your own here! (GitHub)

Starting with early Science Fairs in the '90s in Canada, where I got to work with extremely talented Makers, my interest was bolstered by the open hardware community in the U.S. and U.K., whose platforms (like Pachube/Xively), hackathons and hackerspaces I was quite enthusiastic about. Since then, I have been participating in and running IoT-related hackathons in Switzerland, like the Open Farming Hackdays in Liebegg, TTN Hacknights in Bern, and of course, the MakeZurich editions.

This community has had a long fascination with the "quantified self" movement. Many people have engaged in consistently measuring their own surroundings and bodies, decades before wearables became a fad. Some of us have installed "dash cams" on our vehicles, or even worn them around our necks - collecting images and location data for years. DIY wearables based on compact boards like the Calliope are great as learning devices.

In fact, the first MakeZurich badge was based on the BBC micro:bit - designed for schools, and easy to hack into your shoes, or whatever. Every subsequent edition of the event featured a flashy new wearable badge. You can hear about the history and design efforts behind this in Thomas Amberg's presentation. See also #BadgeLife and Hackaday for glimpses of similar projects worldwide.

This convergence of taking data into your own hands, and applying it to better understand your world, or at least your own body, has a long history. About a decade ago, the Quantified Self movement (linked to much earlier practices, from self-testing to sousveillance) was spearheaded, in my view, by pioneers like Nicholas Felton - the information designer and creator of the Daytum app who beautifully charted his own life and that of his father - or like MIT engineer Lam Vo, who writes:

We have become the largest producers of data in history. Almost every click online, each swipe on our tablets and each tap on our smartphone produces a data point in a virtual repository. According to an MIT study, the average office worker produces 5GB worth of data each day. This data has been deployed by politicians, marketers and government departments to do anything from solving crimes to sending personalized political messages to selling us products. But there are living, breathing humans behind these spreadsheets. We took actions to create these data points. Perhaps we can use them for self exploration.

-- Quantified Selfie (emphasis mine)

As I wrote in an earlier blog post, ideas I have worked on over the past few years ranged from helping to understand public spaces through citizen monitoring, to aggregating ActivityWatch data from my computing environment into playful animations:

My heart on my sleeve

Today, we often leave the quantifying to our smart watches - a somewhat painful topic in Switzerland, one should add. I have become appreciative of the quirks in my zombie Rebble/Pebble, while others in the Maker community are fans of grassroots projects like the Watchy. Some install privacy-protecting open source software like Gadgetbridge, others upgrade the firmware of cheap wearables, some are even 3D-printing their own wrist- or belt-worn devices.

Tip: follow the new community smarterwat.ch for advice on open wearables.

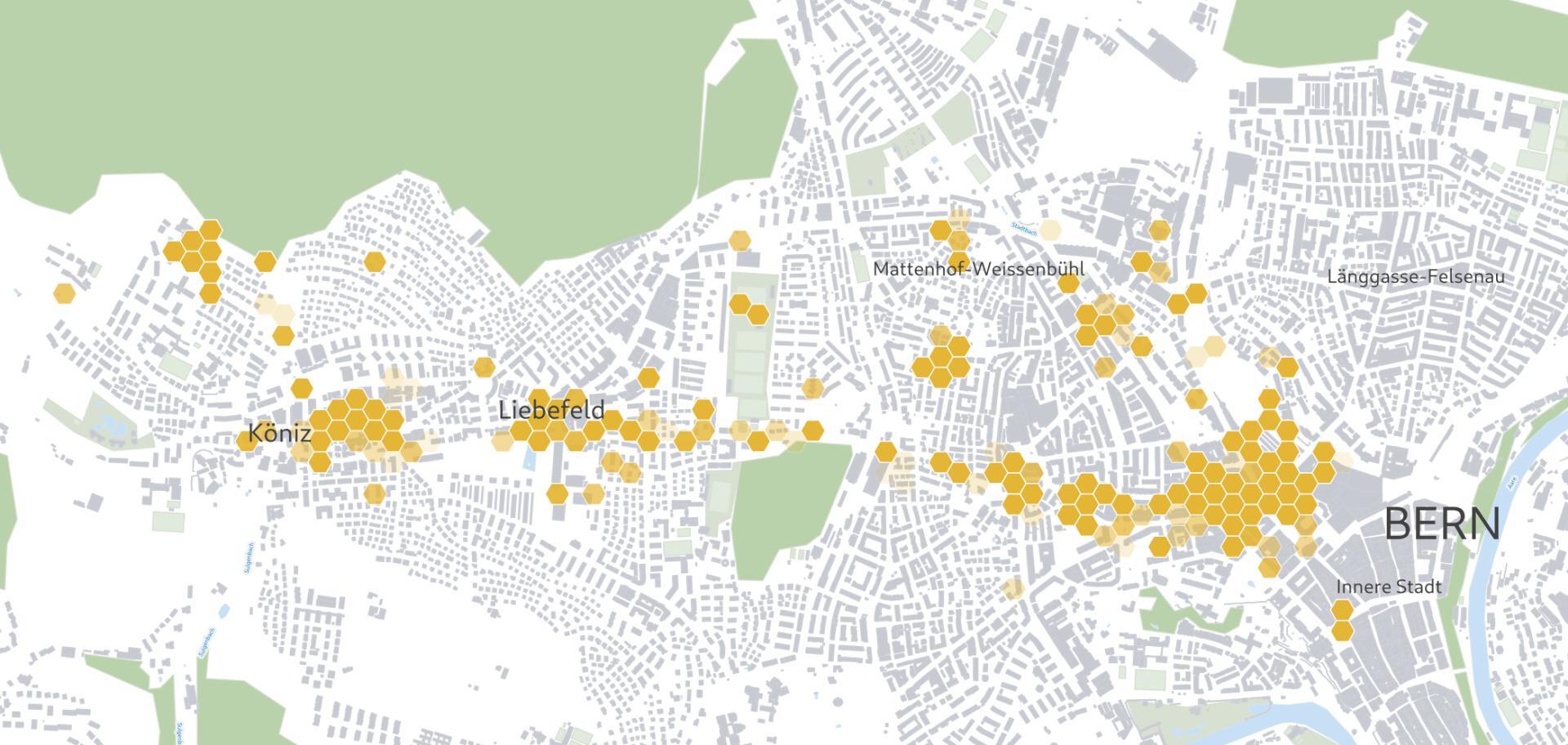

Of course, besides wearables, we also have lots of potential for self-tracking in the devices that help us get around town. From the mobile phone (see POSMO) to our bicycles, we can create maps of spatial footprints - here in aggregate view, made with my Velotracker node connecting to open gateways of The Things Network in the Bern area over the course of several months of rides:

It is at this point, when we take the means of data production into our hands, that we become not just passive residents, but active citizens. Not just being run over by smart city measures, but "painting in" our surroundings with data managed and shared on our own terms.

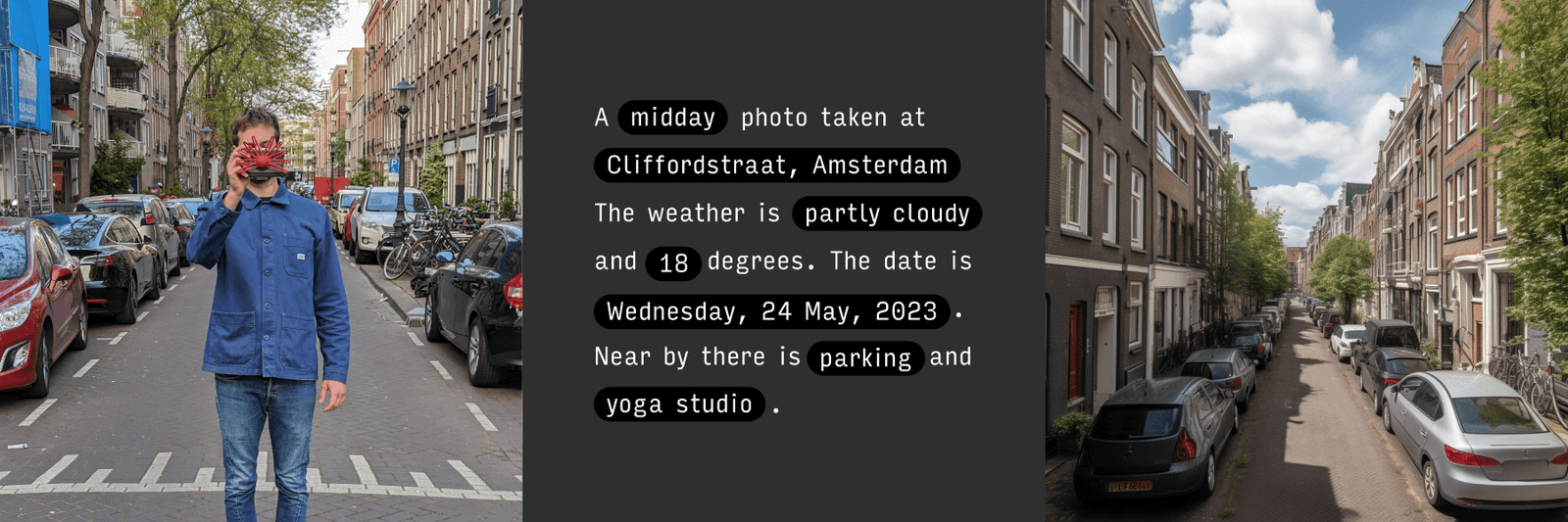

Today, we are on the verge of many breakthroughs in using personal data to filter through massive databases with machine learning, as well as in more powerful edge devices. The lines between personal experience and physical reality are becoming even more blurred with A.I. Take this recent project by Bjørn Karmann for starters - Paragraphica (below) is a handheld device that generates street photography using only geolocation and deep learning - a "blind" camera that hallucinates onto your frames.

And so, we tried in our discussion at the recent hackathon to extend these ideas, imagining a "selfie corner" at Maker events - similar to designated corners of music festivals where visitors are encouraged to take photos with a fancy background or props. A couple of years ago, the Selfie House was a whole pop-up attraction in Zürich dedicated to this kind of thing:

We may also draw inspiration from less superficial ideas, like the Selfie Wall by AGENCY - a critical data art installation:

While seemingly innocent personal records of private moments, selfies are in fact a new resource for third-party datacrunchers. Facial and pattern recognition software is able to extract identity and mood, while metadata embedded in the photo file, social network post protocols, mobile device settings, and user-generated content suggests each selfie leaves a significant ‘digital footprint’ which jeopardizes individual data privacy.

Selfie Wall description via Archinect

MakeSelfie

Federico and I warmly remember the selfie corner at the Mini Maker Faire in Zürich a few years ago, which featured giant cardboard tools as props for extra fun snaps of the family. Out of all this we cooked up the quick and fun "minimal" idea that you can see us presenting here:

Generally the process for participants of our event went like this: pick one of the 100 beautiful hand-made MakeZurich badges, install CircuitPython, then change the initial blinkenlights example code (thanks Urs for the tips!), and finally connect to The Things Network via LoRaWAN libraries (helpfully supported by Tillo and Yannick) to upload your data to the world's most surfable kind of cloud (okay, the Asperitas cloud looks very surfable, too - Pura Vida!). Once you get the hang of the basic process, pick an (Open) Challenge, and start sketching your ideas...

Getting the data you want from the badge is a question of working out which pins you are plugged into, going through the sensor specs if someone else hasn't already written a library, and then tackling the infinitely fun challenges of Digital Signal Processing. Here you can find some more details on my quick attempt to capture some biometrics:
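As a rough illustration of the signal-processing step, here is a minimal sketch in plain Python that estimates a heart rate from a list of raw analog readings. It is not the code we ran at the workshop: the function name `estimate_bpm`, the 60% threshold and the 0.3 second refractory window are all assumptions chosen for the example, and a real Pulse Sensor signal would need more careful filtering.

```python
def estimate_bpm(samples, sample_rate_hz, refractory_s=0.3):
    """Estimate beats per minute from evenly spaced analog readings.

    Hypothetical helper for illustration: counts upward crossings of a
    fixed threshold and averages the time between them.
    """
    if len(samples) < 2:
        return None
    lo, hi = min(samples), max(samples)
    if hi == lo:
        return None  # flat signal, nothing to detect

    # Assumed threshold: 60% of the way from the lowest to the highest reading.
    threshold = lo + 0.6 * (hi - lo)
    # Ignore crossings that follow a beat too closely (noise, double peaks).
    refractory = int(refractory_s * sample_rate_hz)

    beats = []
    for i in range(1, len(samples)):
        rising = samples[i - 1] < threshold <= samples[i]
        if rising and (not beats or i - beats[-1] >= refractory):
            beats.append(i)

    if len(beats) < 2:
        return None
    mean_interval_s = (beats[-1] - beats[0]) / (len(beats) - 1) / sample_rate_hz
    return 60.0 / mean_interval_s


# On the badge, the readings might come from CircuitPython's analogio module,
# e.g. analogio.AnalogIn(<pin>).value sampled in a timed loop. Which pin the
# sensor is plugged into is an assumption you need to check on your own badge.
```

A fixed threshold is the simplest thing that could work on a clean signal; with a noisy sensor or a loose finger, a moving average or adaptive threshold would probably be the next thing to try.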

The idea of MakeSelfie is furthermore to do something with the connection between your computer - we imagine this to be a fixed public kiosk for the selfie corner - and your MakeZurich badge.

First of all, we need to identify it - either by some unique ID in the firmware (Federico explored some options here) or, just for the prototype, by throwing a print('<your_username>') into the serial output. Monitoring the serial console, we can then generate a visual based on dribdat profile API data (e.g. api/loleg) and a quick webcam photo:
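The identification step could look something like the sketch below, which scans lines from the serial console and picks out the first one that looks like a username. This is an illustration rather than our prototype code: the helper name `find_username` and the username pattern (a letter followed by letters, digits, dots, dashes or underscores) are assumptions, so adjust them to whatever your platform allows.

```python
import re

# Assumed username shape: starts with a letter, 2 to 32 characters in total.
USERNAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_.-]{1,31}")


def find_username(lines):
    """Return the first line that looks like a username, or None.

    `lines` can be any iterable of bytes or str, for example lines read
    one by one from the badge's serial port.
    """
    for raw in lines:
        if isinstance(raw, bytes):
            text = raw.decode("utf-8", errors="ignore")
        else:
            text = raw
        text = text.strip()
        # Status messages and sensor readings are skipped: they contain
        # spaces or punctuation, or start with a digit.
        if USERNAME_RE.fullmatch(text):
            return text
    return None
```

On the kiosk side, the lines could be read with a serial library such as pyserial, calling `readline()` in a loop; the port name and baud rate depend on your setup. A plain print is easy to spoof, of course, which is why a unique ID in the firmware is the more robust option.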

We used the forgiving PySimpleGUI and ImageIO to quickly whip up the screen above, vertical-flip bug and all. You can get the source code and learn more on our dribdat project page:

As a next step, we generate a GIF with some nice logos overlaid. We would like to then upload the result to our homecooked social platform - i.e. as a Dribdat activity. Then the complete solution can be based on open hardware, open data, and open source.

Further goals may be to actually visualize the Pulse Sensor data, along with the 'pulse' of our TTN gateway stats, or any other blips from our badge. In the future this may become a shareable, likeable, hexagonal feature of community events.

MakeSelfie could embed some critical discussion of data privacy, our network idealism, and the freedom-loving movement that we know as the Quantified Self. For now, there are just one or two more resistors to solder, and a few hundred lines of code left to write/hallucinate. And lots of fun in store!

For further impressions of the event, check out #MakeZurich on Mastodon, Dribdat, Twitter and LinkedIn.

Thanks for reading. Comments welcome 8-)